Explainable AI

Recent advances in machine learning have led to growing interest in explainable AI (xAI), which aims to give humans insight into the decision-making of machine learning models. However, 1) interpretable reinforcement learning remains an open challenge, and 2) the utility of interpretable models in human-machine teaming has not yet been characterized.

Reinforcement learning (RL) with deep function approximators has produced effective control policies across numerous robotics applications. However, although these policies perform well enough to learn collaborative behaviors autonomously, the conventional deep-RL policies used in prior work lack interpretability, which limits their deployability in safety-critical and legally regulated domains. Without interpretable models, it is difficult to assess a system's flaws, verify its correctness, and establish its trustworthiness.

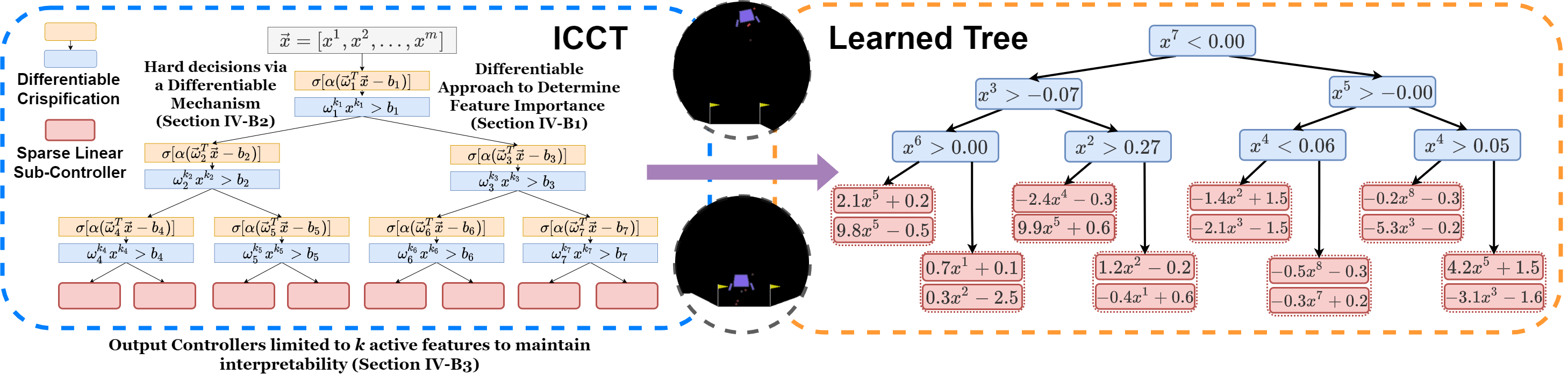

In this work, I use reinforcement learning to directly produce high-performance, interpretable policies represented by a minimalistic tree-based architecture augmented with low-fidelity linear controllers. The result is a novel interpretable reinforcement learning architecture, "Interpretable Continuous Control Trees" (ICCTs). Within this architecture, I provide two extensions to prior differentiable decision tree frameworks: 1) a differentiable crispification procedure that allows optimization in a sparse, decision-tree-like representation, and 2) sparse linear leaf controllers that increase expressivity while maintaining legibility.
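To make the two extensions more concrete, here is a minimal forward-pass sketch of a depth-1 tree. It is not the ICCT implementation from the paper or codebase: the names (`DecisionNode`, `SparseLinearLeaf`, `icct_forward`), the sigmoid soft node, the top-k rule for leaf sparsity, and all parameter values are illustrative assumptions. It contrasts a soft node, where every feature contributes and both leaves are blended, with a crispified node that tests a single feature and routes to exactly one sparse linear controller. Training through these hard choices requires some gradient estimator (a straight-through estimator is one common option); that part is omitted here.

```python
import math
from dataclasses import dataclass
from typing import List


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


@dataclass
class DecisionNode:
    weights: List[float]  # hypothetical learned per-feature weights
    threshold: float      # hypothetical learned comparison threshold

    def _top_feature(self) -> int:
        # Index of the single feature with the largest-magnitude weight.
        return max(range(len(self.weights)), key=lambda i: abs(self.weights[i]))

    def soft_outcome(self, x: List[float]) -> float:
        # Soft (uncrispified) node: all features contribute; output lies in (0, 1).
        z = sum(w * xi for w, xi in zip(self.weights, x)) - self.threshold
        return sigmoid(z)

    def crisp_outcome(self, x: List[float]) -> float:
        # Crisp node: one feature, hard comparison; output is exactly 0.0 or 1.0.
        j = self._top_feature()
        return 1.0 if self.weights[j] * x[j] > self.threshold else 0.0

    def rule(self) -> str:
        # Human-readable form of the crisp test (assumes the chosen weight is nonzero).
        j = self._top_feature()
        w = self.weights[j]
        op = ">" if w > 0 else "<"  # dividing by a negative weight flips the inequality
        return f"x[{j}] {op} {self.threshold / w:.3g}"


@dataclass
class SparseLinearLeaf:
    weights: List[float]  # hypothetical learned controller weights
    bias: float
    k: int = 1            # number of state features the controller may use

    def act(self, x: List[float]) -> float:
        # Keep only the k largest-magnitude weights so the controller stays legible.
        order = sorted(range(len(self.weights)),
                       key=lambda i: abs(self.weights[i]), reverse=True)
        return self.bias + sum(self.weights[i] * x[i] for i in order[: self.k])


def icct_forward(node: DecisionNode, left: SparseLinearLeaf,
                 right: SparseLinearLeaf, x: List[float], crisp: bool = True) -> float:
    # p is the weight on the left leaf; when crisp, p is 0 or 1, so exactly
    # one sparse linear controller produces the action.
    p = node.crisp_outcome(x) if crisp else node.soft_outcome(x)
    return p * left.act(x) + (1.0 - p) * right.act(x)


# Usage with made-up parameters for a depth-1 tree over 3 state features.
node = DecisionNode(weights=[0.1, 2.0, -0.3], threshold=1.0)
left = SparseLinearLeaf(weights=[0.5, -1.0, 3.0], bias=0.2, k=1)
right = SparseLinearLeaf(weights=[1.0, 0.0, 0.0], bias=-0.5, k=1)
x = [0.5, 0.8, 1.0]
print(node.rule())                         # x[1] > 0.5
print(icct_forward(node, left, right, x))  # 3.2
```

Because the crisp node reduces to a single threshold test and each leaf uses at most `k` features, the whole policy can be read as a short if/else over a few state variables, which is the legibility property the extensions aim for.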


Our ICCTs achieve performance competitive with that of deep neural networks across six continuous control domains, including four difficult autonomous driving scenarios, while remaining highly interpretable. An interpretable reinforcement learning architecture that maintains both high performance and interpretability offers a paradigm that could benefit the real-world deployment of autonomous systems.

Project materials:

- Rohan Paleja*, Yaru Niu*, Andrew Silva, Chace Ritchie, Sugju Choi, and Matthew Gombolay. Learning Interpretable, High-Performing Policies for Autonomous Driving. Robotics: Science and Systems (RSS), 2022.
- Codebase.

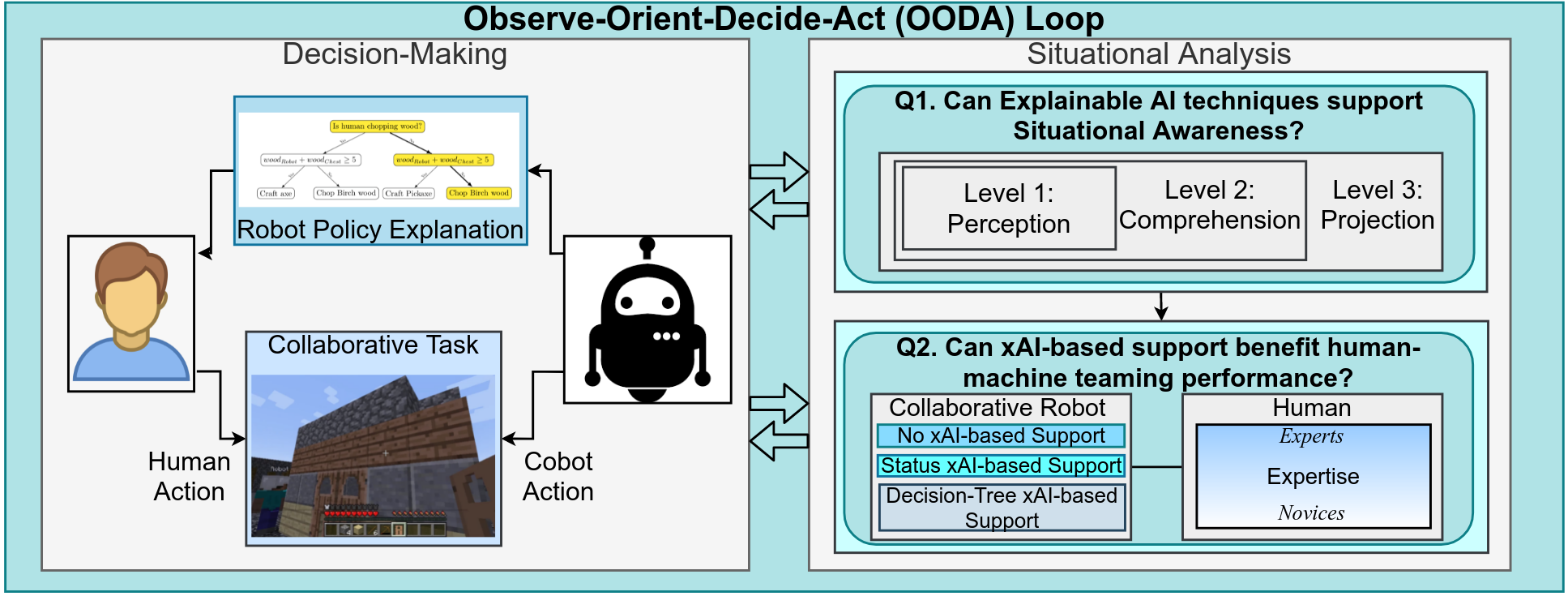

While we can now train agents with interpretable models, the utility of such interpretability has not yet been characterized in human-machine teaming. Importantly, xAI offers the promise of enhancing team situational awareness (SA) and shared mental model development, both key characteristics of effective human-machine teams. Inspired by prior work in human factors, we present the first analyses of xAI in sequential decision-making settings for human-machine teams.

We assessed whether human teammates gain improved SA when supported by xAI techniques and quantified the subjective and objective impact of xAI-supported SA on human-machine team fluency. Notably, I used Minecraft as a human-machine teaming (HMT) platform, creating a complex collaboration task in which humans and machines must make decisions in real time and reason about the world in a continuous space. The resulting interaction is much closer to an envisioned real-world human-robot interaction scenario than prior research, which has focused on point interactions (i.e., a classification problem rather than a sequential decision-making problem) and has not assessed the preoccupation cost of online explanations in HMT.

Importantly, I found that 1) using interpretable models that can support information sharing with humans can lead to increased SA, and 2) xAI-based support is not always beneficial: paying attention to the xAI carries a cost, and this cost may outweigh the benefits of building an accurate shared mental model. These findings emphasize the importance of developing the "right" xAI models for HMT, along with optimization methods that support learning these models. Furthermore, this study lays the groundwork for future work on xAI in sequential decision-making settings and highlights important challenges that must be addressed within the fields of xAI and human-robot interaction (HRI).

Project materials:

- Talk from NeurIPS'21.

- Rohan Paleja, Muyleng Ghuy, Nadun Ranawaka Arachchige, Reed Jensen, and Matthew Gombolay. The Utility of Explainable AI in Ad Hoc Human-Machine Teaming. Conference on Neural Information Processing Systems (NeurIPS), 2021.